Tail Call Optimization in Ruby: Deep Dive

In my last post, I began an exploration of tail call optimization in Ruby with some background on tail call optimization and its little known existence and usage in Ruby. In this post, we'll continue that exploration at a much lower level, moving out of the Ruby layer and descending to whatever depths are necessary to get to the bottom of how the Ruby VM implements tail call optimization internally.

A lot of what follows wouldn't be possible without Pat Shaughnessy's Ruby Under a Microscope (and a healthy dose of K & R). If you find you enjoy the conceptual level of this article and you're interested in more, I'd highly recommend Ruby Under a Microscope. I found it an enjoyable, empowering, fascinating, and approachable introduction to the internals of Ruby. If you're curious about the book, but you're still unsure about it, I'd encourage you to check out Ruby Rogues #146, a book club episode featuring Ruby Under a Microscope with guest appearances by the author, Pat Shaughnessy, and Aaron Patterson of Ruby and Rails fame, and who also wrote the foreword of the book. It's an enjoyable episode that definitely helped guide my decision to read the book.

So, getting on to the subject of today's post. Hold on to your butts.

Revisiting our tail recursive Guinea pig

In my last post, we discovered a tail recursive function in the Ruby test suite, which we extracted (with a few tweaks) to demonstrate tail call optimization in Ruby. We'll need our Guinea pig again for today's exercise, so allow me to introduce her one more time:

code = <<-CODE

class Factorial

def self.fact_helper(n, res)

n == 1 ? res : fact_helper(n - 1, n * res)

end

def self.fact(n)

fact_helper(n, 1)

end

end

CODE

options = {

tailcall_optimization: true,

trace_instruction: false,

}

RubyVM::InstructionSequence.new(code, nil, nil, nil, options).eval

I won't go into the details again, but suffice it to say that this code snippet will add a Factorial class with a tail call optimized fact method to our environment. Our journey begins with this class method.

Initial descent

With our tail recursive Guinea pig revived, we can begin our descent into the internals of Ruby's implementation of tail call optimization. A month ago I wouldn't have known where to begin such a quest, but this is where some of the background and methods employed in Ruby Under a Microscope will be of great utility.

One method that Ruby Under a Microscope uses to great effect is using RubyVM::InstructionSequence#disasm to disassemble Ruby code into the underlying YARV instructions that the Ruby VM will actually execute at runtime. Using this technique we should be able to disassemble both a tail call optimized version and an unoptimized version of our Factorial#fact method and compare the instruction sequences for differences.

Before we continue, let's rewind for a second and discuss YARV. YARV, which stands for Yet Another Ruby Virtual Machine, is a stack-oriented VM internal to Ruby that is responsible for compiling your Ruby code into low-level bytecode instructions (called YARV instructions) and executing those instructions. YARV was introduced in Ruby 1.9 to improve performance over Ruby 1.8's direct traversal and interpretation of the Abstract Syntax Tree generated by parsing a Ruby program. For more insight into on how Ruby executes your code, you can check out an excerpt from Ruby Under a Microscope, How Ruby Executes Your Code by Pat Shaughnessy.

Back to our regularly scheduled broadcast.

To facilitate comparing the YARV instructions of the tail call optimized and unoptimized versions of our factorial function, I've tweaked our Guinea pig script to disassemble both versions of the function and puts them to STDOUT. Here's the resulting script:

code = <<-CODE

class Factorial

def self.fact_helper(n, res)

n == 1 ? res : fact_helper(n - 1, n * res)

end

def self.fact(n)

fact_helper(n, 1)

end

end

CODE

{

'unoptimized' => { :tailcall_optimization => false, :trace_instruction => false },

'tail call optimized' => { :tailcall_optimization => true, :trace_instruction => false },

}.each do |identifier, compile_options|

instruction_sequence = RubyVM::InstructionSequence.new(code, nil, nil, nil, compile_options)

puts "#{identifier}:\n#{instruction_sequence.disasm}"

end

There are two things here worth making note of. First, I've chosen to disable the trace instruction for both versions to avoid unnecessary differences between the two instruction sequences that don't actually pertain to how Ruby implements tail call optimization internally. Second, though it is not explicit in this script, I am running MRI Ruby 2.2.0 locally, so all of the YARV instructions and C code that we'll look at are specific to MRI Ruby 2.2.0 and may be different from other versions. You can view the YARV instructions of the unoptimized Factorial class here and the YARV instructions of the tail call optimized Factorial class here.

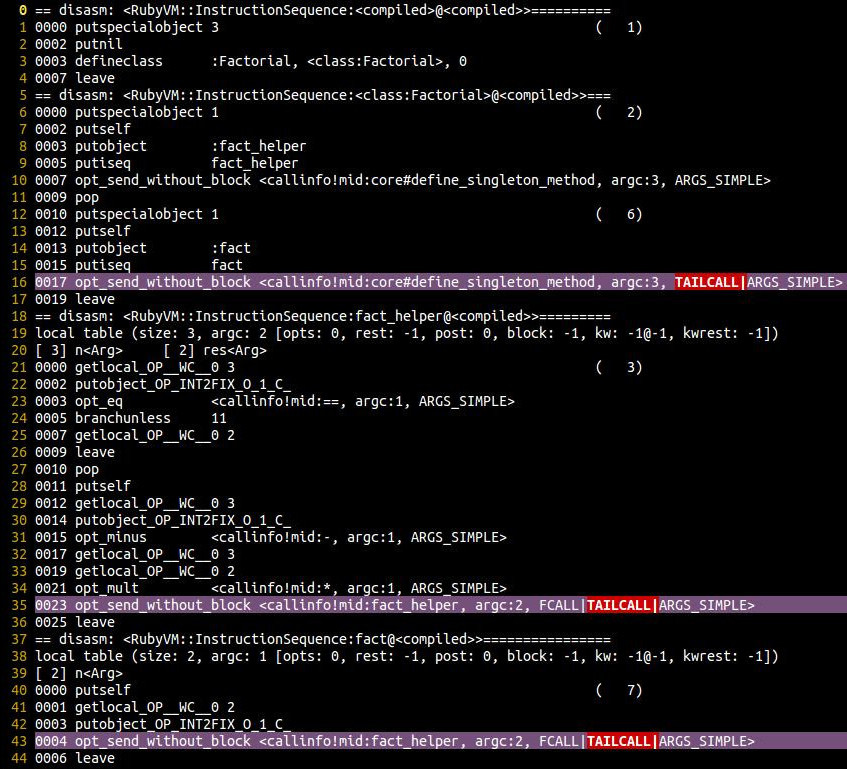

A vimdiff of the two instruction sequences with changed lines highlighted in purple and the actual changes highlighted in red looks like so:

Oh no! Disaster! It seems that our initial descent is some what of a failure. Other than the addition of a TAILCALL flag to a few of the opt_send_without_block instructions, the YARV instructions for both the unoptimized version and the tail call optimized version are exactly the same. What gives?

From here it seems like our only logical course of action is to descend even further and look at the C code that makes up those YARV instructions with the hope that the TAILCALL flag is really all that's needed to transform an unoptimized call into a tail call optimized call.

Descending into the C

We begin our journey into Ruby's C internals where our YARV instructions left us, with the opt_send_without_block instruction. Hopefully, we can find something in the implementation of that instruction that will help us find our way to where Ruby implements tail call optimization internally.

As discussed in Ruby Under a Microscope, the definitions that are used during the Ruby build process to generate the C code for all the YARV instructions live in the Ruby source in insns.def. With a little grepping, we can find the definition of opt_send_without_block around line 1047 of insns.def:

DEFINE_INSN

opt_send_without_block

(CALL_INFO ci)

(...)

(VALUE val) // inc += -ci->orig_argc;

{

ci->argc = ci->orig_argc;

vm_search_method(ci, ci->recv = TOPN(ci->argc));

CALL_METHOD(ci);

}

As you've almost certainly noticed, this isn't quite C. Rather, during the Ruby build process this definition is used to generate the actual C code for the opt_send_without_block instruction. You can view the fully generated C code for opt_send_without_block in all its monstrous glory here.

Luckily, for our purposes, we don't have to go quite to that extreme and can operate at the instruction definition level. One mutation I will make before we continue is to expand the CALL_METHOD macro and remove some noise added to facilitate the macro. That brings us to the following:

ci->argc = ci->orig_argc;

vm_search_method(ci, ci->recv = TOPN(ci->argc));

VALUE v = (*(ci)->call)(th, GET_CFP(), (ci));

if (v == Qundef) {

RESTORE_REGS();

NEXT_INSN();

}

else {

val = v;

}

OK, so what in the name of Neptune is going on here? Well, the first thing to notice is there's no sign of tail call optimization here, so the question for now is, where to next?

In this case, the ci variable is of most interest to our particular quest. The ci variable references a rb_call_info_t struct which encapsulates a variety of data about a method call including, among other things, the receiver of the call, how many arguments the call takes, and a reference to the C function that should actually be executed by the call. It's this final reference, ci->call, that we're most interested in, as we hope to find some trace of tail call optimization therein.

From the code above we can ascertain that when the Ruby VM executes a method call, it invokes the function pointed to by the rb_call_info_t struct's call field with the current thread (th), the current frame pointer (result of GET_CFP), and the rb_call_info_t struct itself (ci) for arguments.

This is definitely a step in the right direction, but since we have no insight into the origins of the function pointed to by the rb_call_info_t struct's call pointer, we'll need to step backward before we can step forward. Luckily for us, we literally only need to take one step backward to the previous line where vm_search_method is invoked.

At this point, rather than drill into every call that is made on the way to our goal, let's speed things up a bit. We'll still walk through each step, but we'll be more brief and skip the code snippets until we get a whiff of tail call optimization. That said, I've collected the source for each step of the way from CALL_METHOD to the internals of Ruby's tail call optimization into one file for your viewing pleasure.

Take a deep breath…

The call to

vm_search_methodis where the value ofci->callis set, and it is set to reference another function,vm_call_general.vm_call_generalwhen called invokes and returns the result of another method,vm_call_method.vm_call_methodat about 155 lines, is a monster of a function, that handles every type of method invocation that the Ruby VM supports. It'd be pretty easy to get lost in this method, but we are fortunate in that we know we are dealing with an instruction sequence method type because we got to this point from a YARV instruction. This allows us to jump right to the portion of the switch statement that deals with instruction sequence type methods. In which case,vm_call_methodreturns the result of yet another function invocationvm_call_iseq_setup.

(If you're beginning to wonder if this rabbit hole of a descent has a bottom, don't worry, we're almost there.)

vm_call_iseq_setupis a two-liner that sets up the callee of the method and then returns the result of another function invocation,vm_call_iseq_setup_2.vm_call_iseq_setup_2is where we finally get our first whiff of tail call optimization. In fact, the only purpose ofvm_call_iseq_setup_2is to check if tail call optimization is enabled and if so it calls yet another function,vm_call_iseq_setup_tailcall.

(So close! But, while we're here, it's worth noting that normally when tail call optimization is not enabled, vm_call_iseq_setup_2 will call vm_call_iseq_setup_normal instead of vm_call_iseq_setup_tailcall. We'll come back to this alternative path in a moment.)

- One look at

vm_call_iseq_setup_tailcalland it's obvious that we've found what we've been searching for, the heart of Ruby's support for tail call optimization.

Success! Well, sort of, we still need to grok what's going on here, and come to think of it, where the hell are we? Let's take a look at what's going on inside vm_call_iseq_setup_tailcall and see if we can find our bearings and see how this call translates into the goodness of tail call optimization.

Just when you were starting to think it was turtles all the way down

Though we could consider vm_call_iseq_setup_tailcall on its own, we would probably do better to use the same strategy that we employed earlier and compare the unoptimized version to the tail call optimized version, and see what is different between the two. It didn't work for us last time, but maybe we'll have better luck this time around.

We've established that the tail optimized version can be found in vm_call_iseq_setup_tailcall, and if it wasn't obvious from its name or from my making a point of mentioning it during our descent, the unoptimized version can be found in vm_call_iseq_setup_normal. Looking at both methods at a high level, it looks like we're still in the process of making the method call, as both of these functions seem to be preparing Ruby's internal stack prior to pushing a new frame onto the call stack.

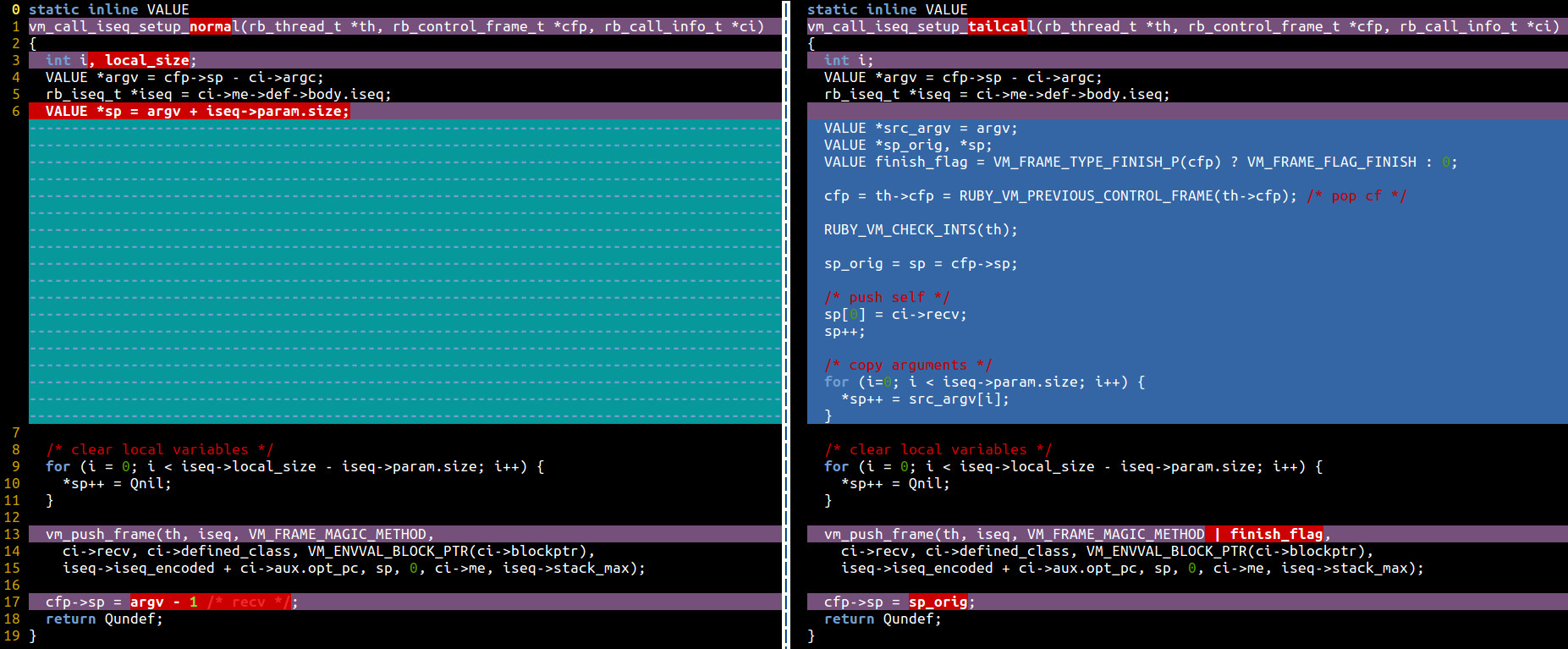

Here's a side-by-side vimdiff highlighting the differences between the two functions, though I should warn you that I made a couple of minor adjustments to vm_call_iseq_setup_normal to suppress irrelevant differences:

Compared to the extremely minimal differences in the our initial diff, I'm much more optimistic that we'll find what we're looking for in this larger change set. Let's start with vm_call_iseq_setup_normal since it is the shorter and more typical of the two functions.

vm_call_iseq_setup_normal

VALUE *argv = cfp->sp - ci->argc;

vm_call_iseq_setup_normal begins by creating a pointer to the position on the stack where the argument vector (argv) for the next iteration of the recursive call begins. This is achieved by taking the current stack frame's stack pointer (cfp->sp) and moving backward down the stack the appropriate number of elements, as determined by our old friend the call info struct (rb_call_info_t) and its argument count field (ci->argc).

rb_iseq_t *iseq = ci->me->def->body.iseq;

vm_call_iseq_setup_normal then continues by creating a pointer to the rb_iseq_t struct identifying and encapsulating data about the instruction sequence that will be invoked by this call.

VALUE *sp = argv + iseq->param.size;

vm_call_iseq_setup_normal next creates a new pointer (sp) and points it to where it calculates the end of the argument vector (argv) to be using the value returned by iseq->param.size, a field related to the instruction sequence indicating how many parameters the instruction sequence takes.

It may seem strange that the VM determines the beginning of argv by descending ci->argc elements from the top of the stack and then later finds the end of argv by ascending iseq->param.size elements up the stack, however the use of iseq->param.size allows the VM to allocate extra space on the stack in situations that use special types of arguments. In this case however, our Guinea pig function uses only simple arguments so ci->argc and iseq->param.size are equal. This brings us right back to where we started at the top of the stack.

for (i = 0; i < iseq->local_size - iseq->param.size; i++) {

*sp++ = Qnil;

}

This next segment is responsible for allocating and clearing out space on the stack for local variables and special variables that will be required to execute the method call. In this case, our Guinea pig function doesn't use any local variables so no space is needed for those, but the VM does need to allocate a spot on the stack for special variables. That said, though the VM allocates a spot on the stack for special variables, our function doesn't actually use any of Ruby's special variables1, so that spot on the stack will remain nil.

vm_push_frame(th, iseq, VM_FRAME_MAGIC_METHOD,

ci->recv, ci->defined_class, VM_ENVVAL_BLOCK_PTR(ci->blockptr),

iseq->iseq_encoded + ci->aux.opt_pc, sp, 0, ci->me, iseq->stack_max);

For our particular intentions we don't need to get into the nitty-gritty details of this function invocation, but suffice it to say this call is responsible for pushing a new frame on to the stack for executing the method call. This new frame is the next iteration of our recursive function.

cfp->sp = argv - 1 /* recv */;

This last bit of logic sets the current frame's stack pointer (cfp->sp) to point to the position on the stack just before the beginning of the argument vector (argv - 1). When this line is executed, that position on the stack is occupied by the receiver of the next iteration of our function call. This may seem a little strange, but this assignment is preparing the current stack frame for when it resumes execution after the completion of the frame we've just pushed on to the stack. When the current frame resumes, it can assume the arguments further up the stack have already been consumed and should continue from further down the stack. Though it's not obvious, we'll see in a minute that this behavior is important for supporting tail call optimization.

Whew, one down. Now let's take a look at how Ruby handles things differently in the tail call optimized case.

vm_call_iseq_setup_tailcall

VALUE *argv = cfp->sp - ci->argc;

rb_iseq_t *iseq = ci->me->def->body.iseq;

vm_call_iseq_setup_tailcall starts exactly the same as its counterpart: It creates a pointer to the beginning of the argument vector (argv) of the next iteration of our recursive function and extracts a reference to the instruction sequence struct from the call info struct.

VALUE *src_argv = argv;

VALUE *sp_orig, *sp;

VALUE finish_flag = VM_FRAME_TYPE_FINISH_P(cfp) ? VM_FRAME_FLAG_FINISH : 0;

Though the functions start the same, vm_call_iseq_setup_tailcall soon distinguishes itself with the allocation of a number of additional variables. First, a new pointer (src_argv) is created pointing to the beginning of the argument vector (argv). Next, two pointers (sp_orig and sp) are allocated, but not assigned. Finally, a fourth variable (finish_flag) is allocated and conditionally assigned.

The final variable, finish_flag, is used to allow tail call optimization of special types of stack frames called finish frames. Since we're working with normal method frames, the finish_flag variable can be safely ignored.

cfp = th->cfp = RUBY_VM_PREVIOUS_CONTROL_FRAME(th->cfp);

This is where the cleverness of tail call optimization begins to surface. Whereas the normal recursive strategy continues to accumulate frame after frame, this line begins to demonstrate how an optimized tail recursive call can avoid doing so.

The secret sauce behind the success of vm_call_iseq_setup_tailcall, and tail call optimization in general, is that each iteration actually removes itself from the stack, as part of invoking the next iteration. Since the nature of recursion can make discussion difficult, it's worth taking a moment here for clarity.

The beginning of vm_call_iseq_setup_tailcall, places us at the point in the sequence of events where the current frame, iteration n of Factorial.fact_helper, is preparing the stack for the recursive invocation of iteration n+1 of Factorial.fact_helper. Iteration n, after storing a reference to the argument vector intended for iteration n+1, pops the current stack frame (itself) off of the call stack, effectively removing itself from the stack and giving the appearance that Factorial.fact is the call in the stack before iteration n+1 of Factorial.fact_helper.

In terms of another metaphor, if you think of the factorial calculation as exercise and the call stack as distance traveled, tail call optimization is kind of like a hamster (or Guinea pig) running on a hamster wheel. Though both the hamster and the recursive call are running in place, they both still make progress on the work they are performing. This analogy may also elucidate why tail recursion can be thought of as a special kind of loop construct.

Returning our focus to vm_call_iseq_setup_tailcall, after popping the current frame from the call stack, vm_call_iseq_setup_tailcall then updates the thread's current frame pointer (th->cfp) and the cfp variable to point at the stack frame prior to the invocation of our tail recursive function, Factorial.fact.

Though this mechanism allows tail call optimization to avoid the stack overflows inherent to its counterpart, we will see in a moment that it also has other benefits.

RUBY_VM_CHECK_INTS(th);

This line handles a little extra bookkeeping that tail call optimization in Ruby incurs. Usually, when Ruby switches from one stack frame to another, it takes a moment to check for pending interrupts. However, since the stack frame was manually popped off of the call stack, the check for interrupts must also be handled manually.

sp_orig = sp = cfp->sp;

Though it is pretty clear that this line assigns the sp_orig and sp variables to the value stored in the current frame's stack pointer (cfp->sp) field, keep in mind that cfp now refers to the call to Factorial.fact.

As you'll recall from the normal setup function, before the first invocation of Factorial.fact_helper, the previous frame (Factorial.fact) would have rewound it's stack pointer to the position on the stack that it should resume execution from, which would have been the point on the stack right before the arguments consumed by the first iteration of Factorial.fact_helper. This behavior benefits tail call optimization in a few ways.

First, because the function call that just ended is exactly the same as the one that's being set up, it can be assumed that there's enough room on the stack for the call being prepared. This means that the stack pointer from the call prior to our tail optimized call (cfp->sp) can be used as the starting position for the new stack (sp) thats being assembled.

Second, because the character of the stack is likely consistent for each recursive call, less overhead is required when setting up the stack. For example, earlier I mentioned that the Ruby VM allocates a spot on the stack for special variables that might be used by the function, but that since the function doesn't use any special variables, that field remains nil. Because of the alignment of values on stack from iteration to iteration, that nil field is actually only assigned on the first iteration and on every other iteration the assignment can be skipped because the value is already nil.

The final benefit that comes from being able to reuse the stack pointer from the stack frame prior to our tail optimized call (cfp->sp) is that that same pointer also doubles as a pointer to the place on the stack that our current frame's stack pointer (cfp->sp) will need to be rewound later. To facilitate this usage a reference is set aside in sp_orig for later use.

sp[0] = ci->recv;

sp++;

With this line, vm_call_iseq_setup_tailcall begins to rebuild the stack for the next iteration of the recursive call. To achieve this, it first pushes the receiver of the call (ci-> recv) into the position at the head of the stack (sp[0]), and increments the stack pointer to the next position.

for (i=0; i < iseq->param.size; i++) {

*sp++ = src_argv[i];

}

Next, the function continues by pushing each of the arguments for the next iteration onto the stack. This is where it becomes clear why a reference to the next iteration's argument vector is needed, as the cfp pointer was replaced, and without this reference (src_argv) there'd be no consistent means by which to access those arguments.

This loop is also responsible for the behavior I alluded to above where each argument is written to a consistent position on the stack with each iteration.

for (i = 0; i < iseq->local_size - iseq->param.size; i++) {

*sp++ = Qnil;

}

Consistent with the normal setup function, the tail call optimized setup function also reserves and resets additional space on the stack for the method call as required.

vm_push_frame(th, iseq, VM_FRAME_MAGIC_METHOD | finish_flag,

ci->recv, ci->defined_class, VM_ENVVAL_BLOCK_PTR(ci->blockptr),

iseq->iseq_encoded + ci->aux.opt_pc, sp, 0, ci->me, iseq->stack_max);

The process of pushing a new frame on to the stack is almost exactly the same as in the normal setup function, except for one slight difference: The bitwise logic related to the finish_flag variable is added to allow tail call optimization to be performed on finish frames as we briefly discussed earlier.

cfp->sp = sp_orig;

Last but not least, after pushing the new frame on to the stack, the setup function sets the current frame pointer's stack pointer (cfp->sp) to the point on the stack that it should resume from. In this case, that position matches the original position of the frame's stack pointer which was tucked away in sp_orig for later use.

At this point we're back in sync with vm_call_iseq_setup_normal, but whereas vm_call_iseq_setup_normal would have picked up another stack frame, after some minor stack shuffling, vm_call_iseq_setup_tailcall leaves us right back where we started, but one step closer to the solution to our factorial calculation.

The bends

Wow. I don't know about you, but I didn't expect the bottom to be quite so far down there. Though I'm eager to come back up for air, as are you I'm sure, it's worth deferring our ascent a moment to reflect on what we found in the depths.

Ruby's implementation of tail call optimization emerges from the Ruby VM's stack-oriented nature and ability to discard the current stack frame as it prepares the next frame for execution. Given this design it becomes more clear why tail call optimization is handled by Ruby on the C side instead of on the YARV side since method call setup is below the conceptual level at which YARV tends to work.

In the end, there's a satirical humor in that we had to go to such depths to understand the facilities that allow the Ruby VM to handle tail recursive functions like treading water at the top of the stack.

It's been a long journey, but I hope you learned something along the way, I know I certainly did. Thanks for reading!

(I swear my next post will be shorter!)

-

Ruby's special

$variables are out of the scope of this article, but you can see where the parser defines the various special variables here. ↩